Greedy Agents and Interfering Humans

This is the web site introducing a new media art project by Tatsuo Unemi, Daniel Bisig, and Philippe Kocher. The animated symbol at the left side of the title is the logo of project 72.

Each person was born with his/her own genetic traits that affects his/her life. At the same time, the environments of family and social circumstances are also undoubtedly influencing all the aspects of what he/she does in the life. Personality is shaped from internal state, external situation, and events happen between them.

Reinforcement learning (aka operant conditioning) is a paradigm of animal learning researched for more than one hundred years in the fields of psychology and ethology. It is a powerful framework to explain how individual behavior is modified through his/her experience. Intuitively saying, we do an action more if it seems to result better, and we do it less if the result would be miserable. Memory helps the prediction of its action's result by itself. That means we learn therefore we have hope and fear.

Information flow of reinforcement learning.

Edward L. Thorndike [1] initiated scientific approaches to these phenomena in a context of social psychology under the name Law of effect in late of 19th century, and some decades later Burrhus F. Skinner [2] organized more rigid experiment from a standing point of Behaviorism using pigeons and rats. These animals can change their behavior so that they get more positive results (feeds) and less negative results (electric shocks). His consequences are reliable enough by the support of statistical analysis. The figure illustrates the information flow of reinforcement learning. To avoid confusion between sensed signal and its interpretation as reward or punishment, this diagram places Evaluation module inside of the learner.

In 1980s, thanks to the technological improvement of electronics, we obtained a powerful machine that can calculate and store enough amount of data to realize practical applications of Artificial Intelligence (in classic meaning). It also meant the era had come to challenge on flexible adaptation by the machine inspired from human intelligence. Richard S. Sutton and Andrew G. Barto [3] leaded research activities on reinforcement learning by the machine from early 1990s, organizing academic workshops and editing some books. A project member, Tatsuo Unemi, also joined these activities and published some papers such as [4]. AI is useful not only for engineering (weak AI) but also for psychology by trying to build a real intelligent machine (strong AI). Even though it might be extremely difficult to build a machine that everyone recognizes intelligent as humans are, the fruits of such researches are often suggestive to consider what human is. Recent researches on reinforcement learning is also in the current movement of Deep Learning, that enable to apply it to more complex environment and action set for both more realistic animal learning models and practical engineering applications.

The installation of this project consists of a visualization of reinforcement learning and a physical audio-visual environment the visitors are invited to be involved in.

The grid world as a testbed of Q-Learning.

The visualization part employs a simple classic framework of Q-Learning [5] for navigation in a small grid world. The world is nine by six two-dimensional square lattice, containing 54 cells in total. The learner is located at one of those cells and it knows where it is positioned, but some are occupied by obstacles that prevents the learner from getting there in. The possible actions are to move up, down, left and right in each single step that will position it to the neighbor cell if possible. If the destination cell is beyond the border or occupied by an obstacle, it stays at the same position. There are two special cells named Start and Goal. The learner starts from Start cell and it gets reward only when it reaches Goal cell. The learner is forced to return back to Start after reaching Goal. The performance of learning is measured by how often it gets at Goal. In other words, the task is to find shorter path from Start to Goal. Along the progress of learning, the learner revises its memory that tells how hopeful to take a specific action at a specific position. Starting with a settings of memory of same values greater than zero for every position, the learner prefers to explore unknown position and action because it gets disappointed as no reward come before it arrives at Goal. Once it reaches the Goal, the value is gradually propagated backward from the Goal to positions connected by an action following the learner's experience. To accelerate the propagation, a reflection mechanism is embedded by imaginary retrial of past experience randomly chosen from recent some hundreds steps. This is similar to Dyna architecture proposed by Richard S. Sutton [6].

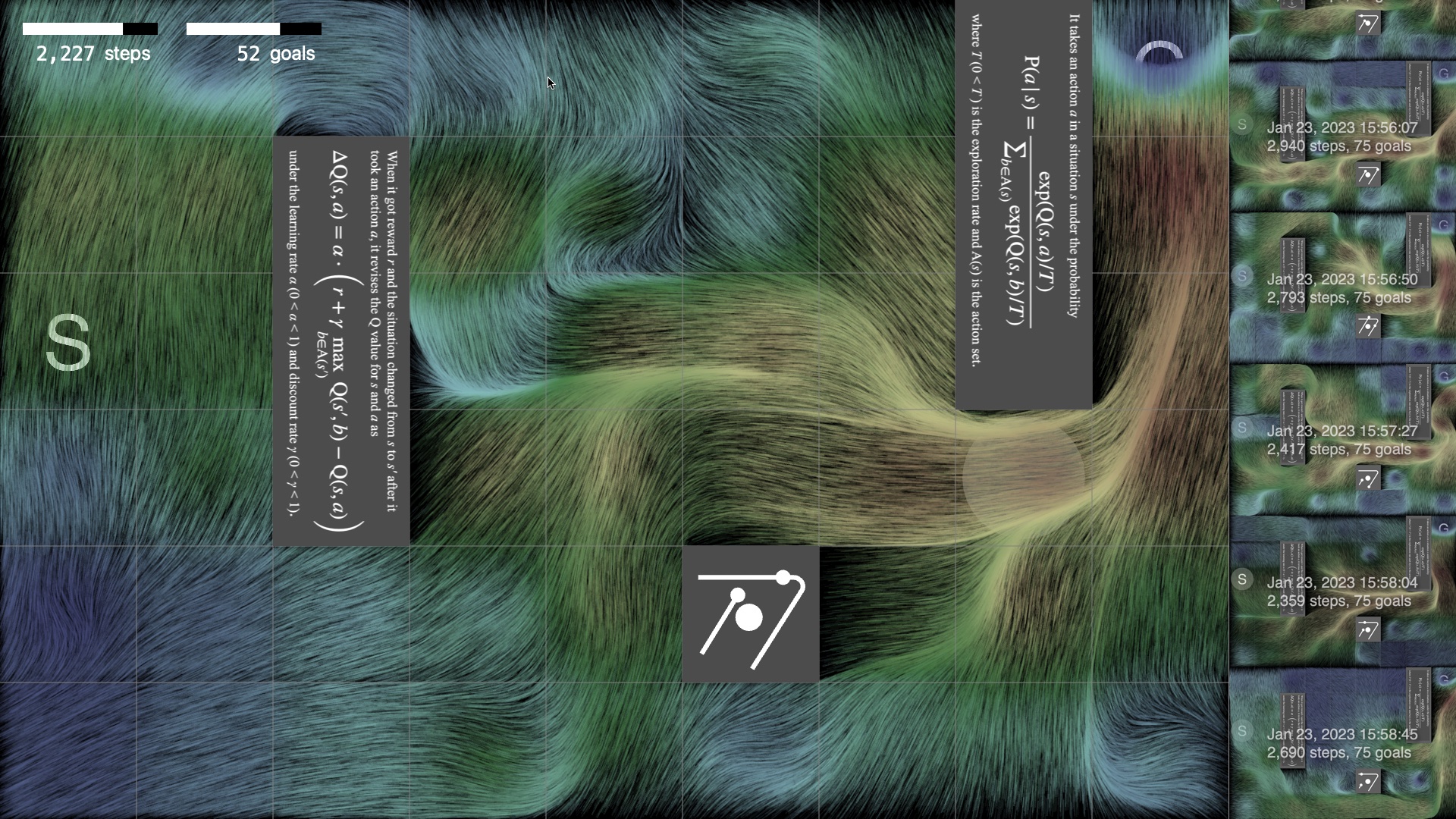

An example of screen shot of visualization.

Click the image to see the larger version.

Click here to watch video.

Such memory can be interpreted as a map of vector field in the grid world representing the recommended directions the learner should take. The vector for each cell is calculated as the summation of four vectors each of which corresponds to the action's direction and hopefulness. The visualization is implemented by the animation of particles flow reflecting this field. Some hundred thousands of small line segments are moving pushed by the interpolated force of the vector field. Each particle is drawn in color indicating the moving speed.

In the simulation's run, a single learning process stops when a prefixed number of steps or goals passed, the world image with particles is added to the list of images arranged in the right side of the screen, and then repeat the process again in arbitrary times. The visitor can see the results of past five processes in the image list that shows the adult personality of learners are different even if the original environments and abilities are exactly same.

- Thorndike, E. L. (1898) Animal Intelligence: an Experimental Study of the Associative Processes, The Psychological Review: Monograph Supplements, 2(4), i–109.

- Skinner, B. F. (1953) Science and Human Behavior, New York: MacMillan.

- Sutton, R. S. and Barto, A. G. (1998, 2nd ed. 2018) Reinforcement Learning: An Introduction, Cambridge, MA: MIT Press.

- Unemi, T., et al (1994) Evolutionary Differentiation of Learning Abilities - a case study on optimizing parameter values in Q-learning by a genetic algorithm, in Proceedings of the Fourth International Workshop on the Synthesis and Simulation of Living Systems (Artificial Life IV), 331-336, MIT Press.

- Watkins, C. J. C. H. (1989) Learning from Delayed Rewards (Ph.D. thesis). University of Cambridge.

- Sutton, R. S. (1990) Integrated architectures for learning, planning, and reacting based on approximating dynamic programming, in Proceedings of the Seventh International Conference on Machine Learning, 216-224, Morgan Kaufmann.

Publications and Exhibitions

- Unemi, T., Kocher, P., and Bisig, D.: Greedy Agents and Interfering Humans, xCoAx 2023 10th Conference on Computation, Communication, Aesthetics & X, pp. 363–367, 2023.xCoAx.org, Weimar, Germany, July 5–7, 2023. DOI 10.34626/xcoax.2023.11th.363

- Unemi, T., Bisig, D., and Kocher, P.: Greedy Agents and Interfering Humans – An artwork making humans meddle with a life in the machine, ALIFE 2023, Sapporo, Janan, July 24–28, Paper No: isal_a_00625, 36; 3 pages, Published Online: July 24 2023, DOI 10.1162/isal_a_00625 , Slides in PDF.

- Unemi, T., Kocher, P., and Bisig, D.: Interaction with a Memory Landscape, GA 2023 26th Generative Art Conference, pp. 131–136, Rome, Italy, December 11–13, 2023.

Video

- Interaction and Sonification recorded in xCoAx 2023 in Gallery Eigenheim, Weimar, Germany, July 5, 2023 (Tentative).